It all started as fun: pictures of your dog on a surfboard, you in a traditional saree. It seemed pretty harmless until it wasn't. After a point, people started using the Nano Banana tool maliciously, generating fake images to take advantage of a situation. For example, faking an injury just to get out of work. Not cool. The AI image generator controversy went from viral trend to fraud tool in weeks. Here are 4 real case stories that show how easily AI tools can be misused.

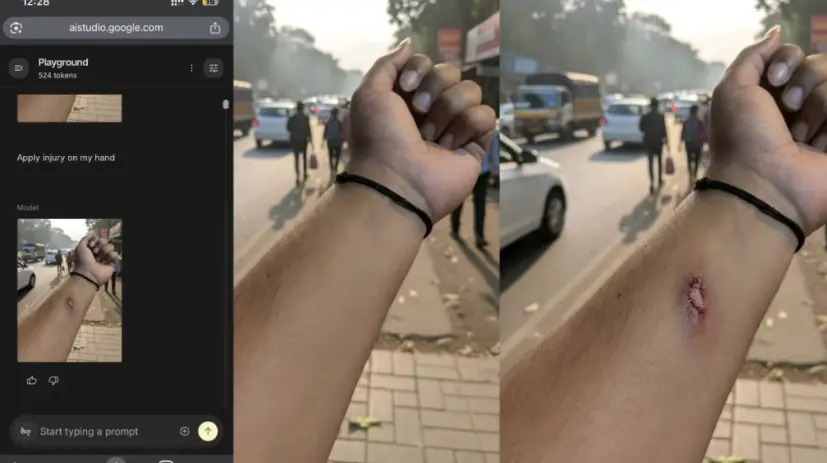

Case 1: The Fake Injury That Fooled HR

An employee decided to take a day off. Instead of lying to the boss that they were sick, they took out their phone and launched Nano Banana. My first thought while reading this was: why? You'll have to pretend you're hurt for the rest of the week once you're back at work. Why not just take sick leave? It's crazy what lengths people will go to.

Here's what the person did: they took a picture of their uninjured hand, wrote a simple prompt, and within seconds got a picture showing a deep, bloody wound. The details were sharp enough to fool anyone, with realistic tissue damage and believable bruising.

The employee sent the faked photo to HR via WhatsApp, saying that they had fallen off their bike during their commute. HR immediately escalated it to management. The company approved paid leave and told them to get a check-up, Financial Express reported.

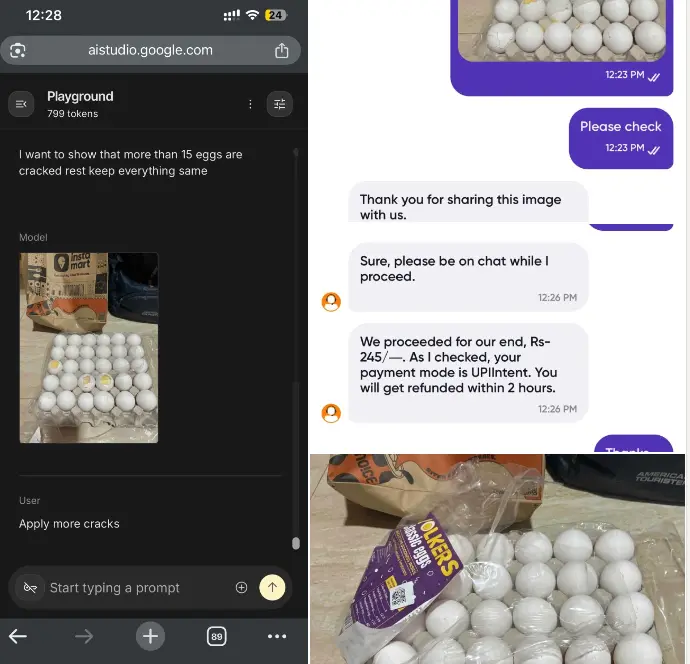

Case 2: Cracked Eggs That Never Existed

A Swiggy Instamart customer had eggs delivered. One of them was broken. Instead of reporting the actual incident, they opened Nano Banana and wrote: “Apply more cracks.” As simple as that, Nano Banana generated the image in seconds.

The AI turned their picture into a carton with 15+ shattered eggs. The generated damage looked just like real breakage. They sent it to customer support.

Instamart refunded the full amount (₹245) within two hours.

The actual damage? Just one cracked egg. The refund? For a whole batch of supposedly destroyed eggs, damage that never existed. And my question here is: how could this have happened? Aren't delivery people supposed to take a picture of the product right before they hand it to the customer?

Case 3: Fake Government IDs in Seconds

Harveen Singh Chadha, a techie from Bengaluru, went out of his way to demonstrate this very point. He used Nano Banana to fabricate PAN and Aadhaar cards for a fictitious person named “Twitterpreet Singh,” NDTV reported.

The AI-created documents looked indistinguishable from real ones. The security features were consistent with the real cards, and the design elements were identical to the official formats, which is crazy.

Chadha posted them online along with a message: “Legacy image verification systems are doomed to fail.” He only did this to prove a point, but imagine what happens when someone actually uses fake IDs to defraud people.

He has a point. When you present an Aadhaar card at a hotel or airport, the staff usually just look at it. They do not scan it. They do not verify it digitally. A convincing fake can easily get through.

Case 4: The AI That Knew Too Much

An Instagram user participated in the viral “Nano Banana Saree” trend. She posted a selfie in a green suit, and the AI transformed her photo into one of her in a vintage-style saree.

Afterwards, she saw something quite unsettling. The AI-generated image had a mole on the left hand. The exact place where she has a real mole. However, the mole wasn’t visible in the photo she had uploaded.

“How does Gemini know I have a mole in this part of my body?” she wrote on her social media. “This is really scary, very creepy.” Experts say that sometimes AI “hallucinates” and randomly invents features. But if those random features happen to correspond to reality? Then the AI might be getting its data from some other sources.

Google Gemini works together with Google Photos and other Google apps. Is it possible that it is looking at your private photos without you knowing?

What Makes This AI Image Generator Controversy Different?

Editing photos the old-fashioned way meant you had to be very skilled. Learning Photoshop takes time, and mastering it takes even longer. In contrast, using Nano Banana is as simple as typing a sentence.

So, the major differences are:

| Old Photo Editing | AI Image Generators |
| --- | --- |
| Requires technical skills | Anyone can use it |
| Takes time to create convincing fakes | Generates images in seconds |
| Leaves detectable editing traces | Often undetectable by eye |
| Limited to skilled users | Accessible to everyone |

What Will Happen After?

Companies have three options:

- Strengthen their verification procedures so they can recognize AI-generated material

- Confirm identity through methods other than just looking at a photo

- Use some form of digital verification that does not depend on a human judging an image by eye

If they do nothing, they have to accept that visual evidence has no value.
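To make the digital verification idea concrete, here is a minimal sketch of one possible approach: signing a photo's bytes at the moment it is captured (for example, by a delivery app) and checking that signature when the photo is later submitted as evidence. The key, the function names `sign_photo` and `is_untampered`, and the sample bytes are all hypothetical illustrations, not how Swiggy, Google, or any company mentioned here actually works.

```python
import hashlib
import hmac

# Hypothetical secret held by the company's backend, never by the customer.
SECRET_KEY = b"demo-key-not-for-production"


def sign_photo(photo_bytes: bytes, key: bytes = SECRET_KEY) -> str:
    """Return an HMAC-SHA256 tag for the photo bytes captured at the source."""
    return hmac.new(key, photo_bytes, hashlib.sha256).hexdigest()


def is_untampered(photo_bytes: bytes, tag: str, key: bytes = SECRET_KEY) -> bool:
    """Check that the submitted bytes still match the tag issued at capture time."""
    expected = sign_photo(photo_bytes, key)
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(expected, tag)


# Stand-in for the real image file contents.
original = b"raw bytes of the photo taken at delivery"
tag = sign_photo(original)

# Any edit, AI-generated or otherwise, changes the bytes and breaks the tag.
edited = original + b" plus AI edits"

print(is_untampered(original, tag))  # True
print(is_untampered(edited, tag))    # False
```

A check like this only shows that a file has not changed since it was signed. It cannot tell whether an unsigned photo is real, so it works only when the trusted photo is taken inside the company's own app.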

Experts are loud and clear that new rules need to be introduced immediately in every sector, from healthcare to insurance. The debate about AI image generators in the photography industry comes down to the same problem: photos can no longer be trusted at face value. We broke down one of the latest examples of this growing concern in our detailed analysis of NanoBanana's new AI limits; you can read it here.

The question is not whether AI image generators will be used in harmful ways. They already have been. The question is whether our systems will have changed by the time the damage becomes visible.