CES 2026 demonstrated how physical AI can be integrated into our daily lives.

CES 2026 showcased physical AI as an enhancement to everyday life rather than as technology for its own sake. Visitors could interact with robots that fold laundry, carry things up and down stairs, welcome guests, or keep people company the way a pet would.

Physical AI refers to artificial intelligence that works through movement, touch, sound, and interaction with the physical environment, rather than through talking, writing, or typing. A physical AI system may walk around, handle cleaning tasks such as sweeping up after people, provide comfort and companionship, and support learning through experience.

Many participants arrived at CES 2026 expecting improvements in cell phones and laptops, but left having met robot helpers, emotional companions (a robot dog, for example), and machines that offer more than simple tools do. The experience probably did not feel like sitting down with friends. It was instead a two-way, emotionally intense exchange between people and physical AI, one that could bring sadness or even happiness. That emotional shift reflects the kind of immersive human-tech interaction increasingly showcased at major events like CES 2026.

At CES 2026, physical AI moved into the real world.

From screen-based AI to physical AI

The transition from digital AI to physical AI has been gradual; by the end of CES 2026, however, it was evident that this category of AI had taken an entirely different path. AI that was traditionally accessed on a screen, through text or images, now has a physical form.

According to Mastercard, physical AI includes robots that no longer need someone to program them step by step. Instead, these robots use video, sensor, and experiential data to learn to understand and react to what is happening around them. They can learn about people’s intent and perceptions and adapt as conditions change.

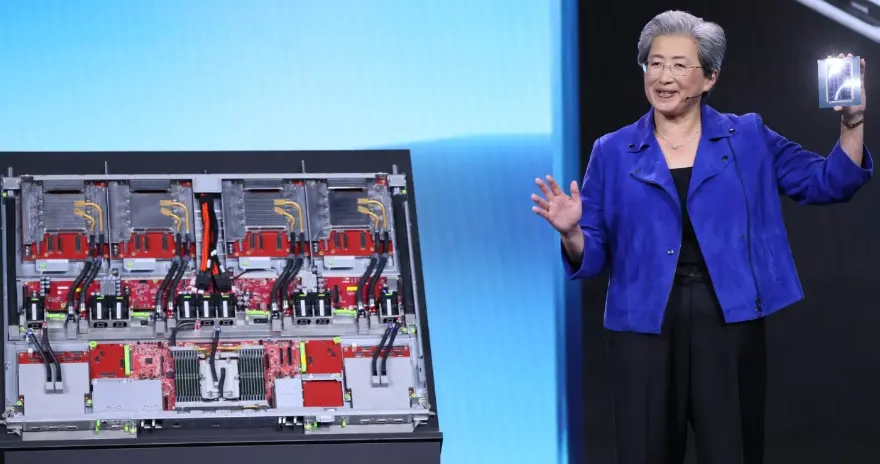

This transition occurred because three key elements came together, making it possible for robots to learn rather than just follow prescribed rules:

- Faster chips built specifically for real-time decision-making and movement.

- Improved vision and motion models that give robots a more robust understanding of physical space.

- Massive training systems that teach robots using both real-world and simulated data sets.

By the close of CES 2026, it was clear that robots had moved from simply following a predefined set of rules to being able to learn. This was the main focus of CES 2026.

Robot helpers are finally useful

The most impressive robot designs are the ones that are actually useful. The best robots are practical and do not rely on animated or human-like qualities for their appeal.

A superb example of a practical robot is the stair-climbing robot vacuum cleaner. It may seem like a simple concept, but it solves a major limitation of traditional robot vacuums, which only work on flat floors. It climbs stairs on articulated legs, builds a 3D map of the space it is cleaning, and adapts its cleaning method to the stairs in real time.

Robots have also been developed for kitchens and laundry rooms. Some are designed to pick up household items, while others can fold towels or load the dishwasher.

Most of these robots are not built for speed. However, they do work! Earlier generations were “cool” but did not function in real household settings, whereas the newest generation of practical cleaning assistants succeeds in the home because it is slow, precise, and dependable.

Reliability, not speed, is the primary goal of physical artificial intelligence.

AI companions are becoming emotional, not just smart

The most popular products at this year’s event were built for companionship rather than work. One company introduced an AI pet that evolves as you interact with it. The pet learns to recognize your voice, develops its own personality, and responds emotionally to your feelings.

Another company created a robot dog targeted at seniors. The robot dog responds positively to physical touch, wags its tail, makes soft sounds, and recognizes and reacts to voices. These products were not toys; rather, they were emotional support devices.

While these products may entertain users, their primary purpose is to provide emotional comfort.

Research indicates that loneliness is prevalent among older adults, and not everyone is able to care for a live animal. Robots may offer an alternative source of connection without the responsibilities of owning one. However, these products raise the question of whether building emotional connections with a nonliving object is beneficial, and whether that attachment replaces the need for connections with living people. Nonetheless, these robots offer a viable option for addressing the need for companionship in a society where many individuals do not have access to 24/7 live human care.

Physical forms of AI can address the need for connection.

What exactly can physical AI do right now?

These systems have already shown proficiency in the following areas:

- Basic cleaning and organisation

- Identifying and retrieving objects

- Navigating around the house

- Communicating via voice

- Displaying basic emotions

- Remembering how the user uses the system

They cannot think for themselves and they are not completely autonomous; however, they can consistently produce these outcomes. This consistency is what makes them such a valuable asset.

How does physical AI change daily life?

| Digital AI | Physical AI |
|---|---|
| Lives on screens | Lives in real spaces |
| Responds to text | Responds to movement |
| Gives answers | Takes action |
| Feels abstract | Feels present |
| Easy to ignore | Hard to ignore |

What comes next for physical AI?

Analysts agree on one thing: physical AI will grow rapidly.

What we can expect in the next two years:

- Lower-cost home robots

- Improved safety systems

- Faster learning models

- Enhanced privacy protections for users

- More emotionally expressive designs

However, this also creates a great deal of tension among users.

Some of those tensions include:

- Concerns about in-home surveillance

- Concerns about job displacement

- Concerns about over-reliance

- Concerns about emotional attachment

- Concerns about data misuse

All of these concerns are significant.

Because physical AI touches human life so directly, it cannot be governed by the same rules that apply to traditional software.

Wrapping it up

Physical AI is no longer just an idea; as of CES 2026, it is an actual product category. Today these products still have flaws, are not yet affordable, and may take more effort to obtain or use than most consumers would anticipate.

These drawbacks may deter buyers, but the products do offer a different kind of experience from what one might expect of typical AI products.

The biggest difference between physical AI and traditional AI is that physical AI offers a level of companionship. A person is not simply a user or operator of this kind of AI; they can form a bond with it.