AI tools are quickly becoming part of many business operations, such as customer support, internal documentation, sales enablement, and training, often in the form of chatbots trained on a company’s proprietary data. With this shift comes a critical question: “Is your data secure?” So where does AI data security fit as an evaluation criterion: is it a core requirement or just an added benefit?

CustomGPT.ai claims to be a business-ready platform with strong privacy and security features; however, there may be limits to how well it protects confidential business information.

Let’s look into this further.

Why AI Data Security Matters for Businesses

When you upload data to an AI system, you are usually sharing internal documents, customer communications, policies, or product information. If any of this data becomes public, gets mixed in with other users’ records, or is reused to train future models, it can no longer be considered secure.

The fundamental questions about using AI securely with business data include:

- Who has access to it?

- Where is it located?

- Is it shared with third parties or reused in any way?

- Can it ever be permanently deleted?

- Does its handling comply with regulations such as GDPR?

At CustomGPT.ai, these questions are a major focus for our users as well as for us.

How CustomGPT.ai Handles Business Data

CustomGPT.ai is a no-code platform that lets businesses train AI chatbots on their own content, such as documents, websites, PDFs, and internal knowledge bases. It also takes a privacy-first approach to security: uploaded data is not used to train any public models.

Each chatbot is fully isolated from the others, and access to each chatbot is controlled individually.

These protections put CustomGPT ahead of many generic AI tools on the market.

GDPR and Privacy-First Design

CustomGPT complies with the GDPR in its handling of personal and commercial information. The company emphasizes transparency, user consent, and user control over how collected data is used.

Users decide what data they send to us, how long it remains active, and when it is removed from our servers. Processing is limited: by default, data is not stored or used for other purposes such as model training or resale.

Although CustomGPT relies on U.S.-based cloud services, we apply GDPR guidelines throughout data management and processing.

This approach helps CustomGPT meet the privacy expectations of businesses with global operations, subject to the limitations discussed later in this article.

Private by Default Chatbots

A significant strength of CustomGPT’s approach to AI data security is that all chatbots are private by default.

What This Means:

- Unauthorized users cannot access the chatbot.

- Public access must be explicitly enabled.

- Internal chatbots remain internal unless they are explicitly shared publicly.

- Uploaded files can be downloaded from the chatbot, but they are not stored permanently unless you choose to keep them for future use. Limiting how long sensitive files remain in the system reduces their exposure.

- Chat logs are anonymized as well: individual queries and responses cannot be traced back to specific users, which protects user privacy at the identity level.

Secure Infrastructure and Trusted Vendors

CustomGPT is built on well-known infrastructure providers such as AWS, and it follows the best practices set by established vendors such as Stripe.

Relying on mature cloud providers generally reduces risk. These providers invest heavily in redundancy, monitoring, compliance, and physical security. No system is flawless, but mature providers can lower risk compared with self-hosting or unproven alternatives.

Encryption in Transit and at Rest

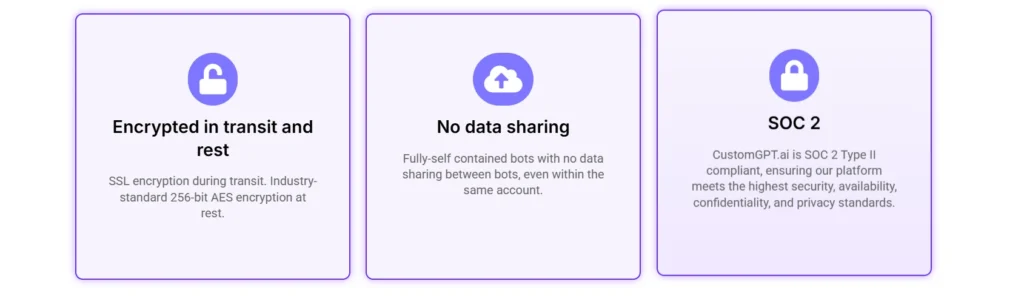

CustomGPT encrypts data using established, secure methods, both in transit and at rest.

SSL/TLS encrypts data as it moves to and from CustomGPT’s servers.

AES (with 128-, 192-, or 256-bit keys) encrypts all data stored on disk or other media. Together, these measures protect the documents you upload, as well as the requests and responses the chatbot handles, while they are transmitted and while they are stored.

For companies that store internal documents or customer data, this level of encryption should be treated as a baseline requirement, not an optional feature.

No Data Sharing Between Bots

An important security feature that many users overlook is that the data behind one CustomGPT chatbot never mixes with or influences another chatbot, even when both are in the same account.

This is particularly beneficial for:

- Agencies that serve multiple clients

- Companies with several departments

- Consultants managing many separate projects

Because bots do not share data internally, the risk of accidental leakage and cross-contamination of sensitive information is reduced.

SOC 2 Type II Compliance Explained Simply

CustomGPT.ai has achieved SOC 2 Type II compliance, an important indicator of security readiness. Unlike a Type I report, which evaluates controls at a single point in time, a Type II audit verifies that security controls operate effectively over an extended period, and it is renewed on an ongoing basis.

SOC 2 evaluates controls against five Trust Services Criteria (only Security is mandatory; the others are included in an audit’s scope at the organization’s discretion):

- Security

- Availability

- Confidentiality

- Processing Integrity

- Privacy

For many mid-size and large companies, SOC 2 compliance is the minimum requirement before adopting any new SaaS product that can access internal data.

This independent audit lends credibility to CustomGPT’s security claims.

Where the Limitations Appear

Despite its solid foundation for AI data protection, CustomGPT is not a solution for every compliance need.

There are several considerations to keep in mind.

First, CustomGPT does not currently offer data residency in the European Union (EU). If strict regional regulations require your organization to store its data in the EU, CustomGPT may not be a suitable option.

Second, CustomGPT does not offer a specific contract or provision, such as a Business Associate Agreement (BAA), to support HIPAA (Health Insurance Portability and Accountability Act) compliance. If your organization handles protected health information (PHI), you may not be able to use CustomGPT for that data.

Third, CustomGPT has only recently introduced two-factor authentication, and some companies may require more advanced identity management controls, such as organization-wide MFA enforcement or deeper single sign-on (SSO) options.

Finally, OpenAI may retain data that CustomGPT sends through the OpenAI API for up to 30 days (for example, to monitor for abuse) before deleting it. If your organization requires zero data storage by external parties, this may be an issue.

Is CustomGPT Safe Enough for Sensitive Business Data?

For many companies, CustomGPT provides strong AI data security through encryption, privacy controls, and compliance with standards such as SOC 2 Type II and GDPR. It does not use customer data for training, and it keeps chatbots separate by design.

Nevertheless, if your company needs:

- data residency within the EU

- HIPAA compliance

- fully on-premises deployment

- guaranteed non-retention of data by any third party

then you may want to evaluate more specialized enterprise solutions.

Wrapping It Up

If you’re looking for an AI chatbot for your business, CustomGPT.ai is a great place to start. Its privacy-first design puts data protection at the center, and its security features make it safe for use cases ranging from internal communications to customer service.

When it comes to AI data security, there is no perfect solution; building a secure AI environment is about managing the risks involved. Many teams find that CustomGPT strikes a sensible balance between usability and security.